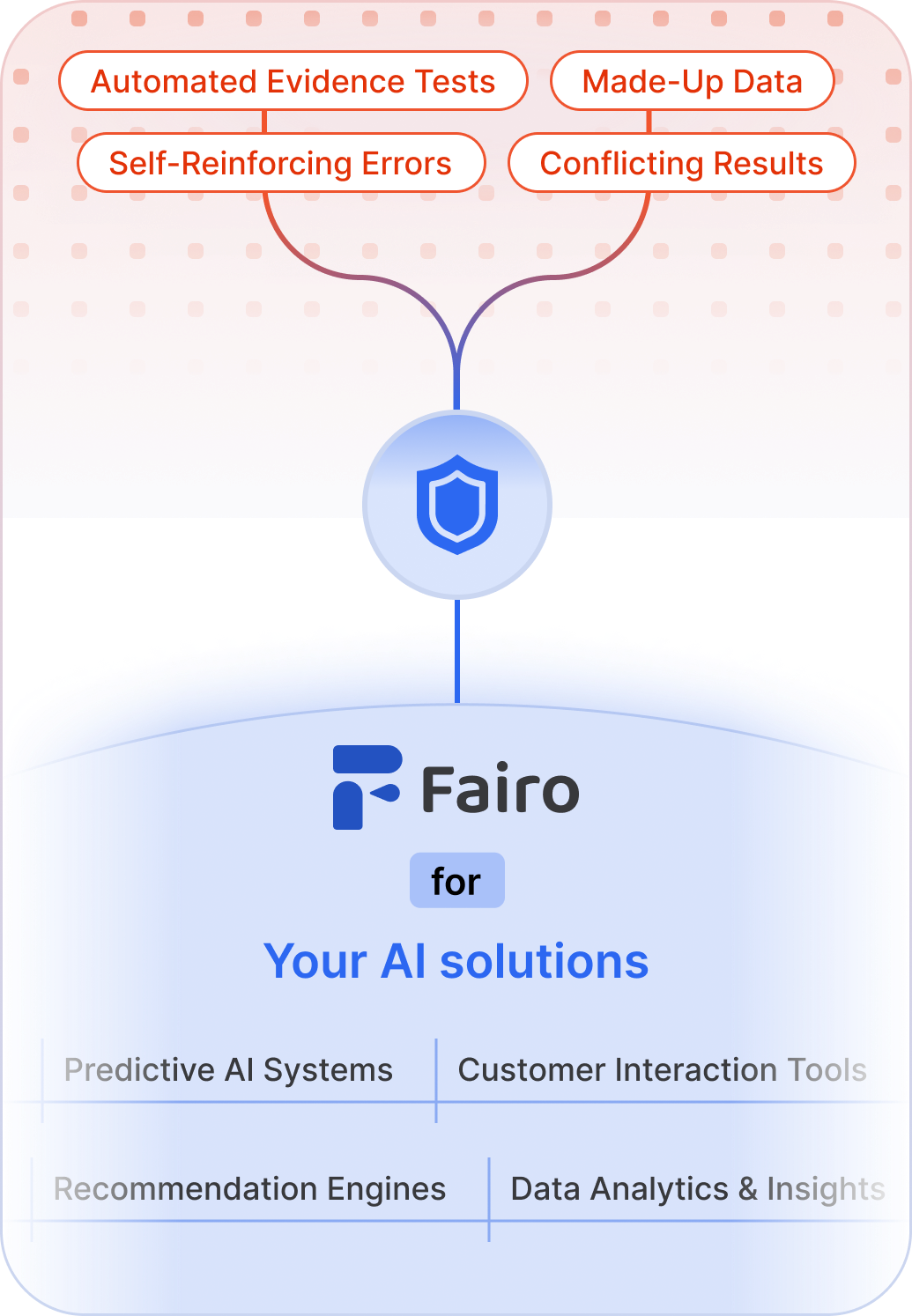

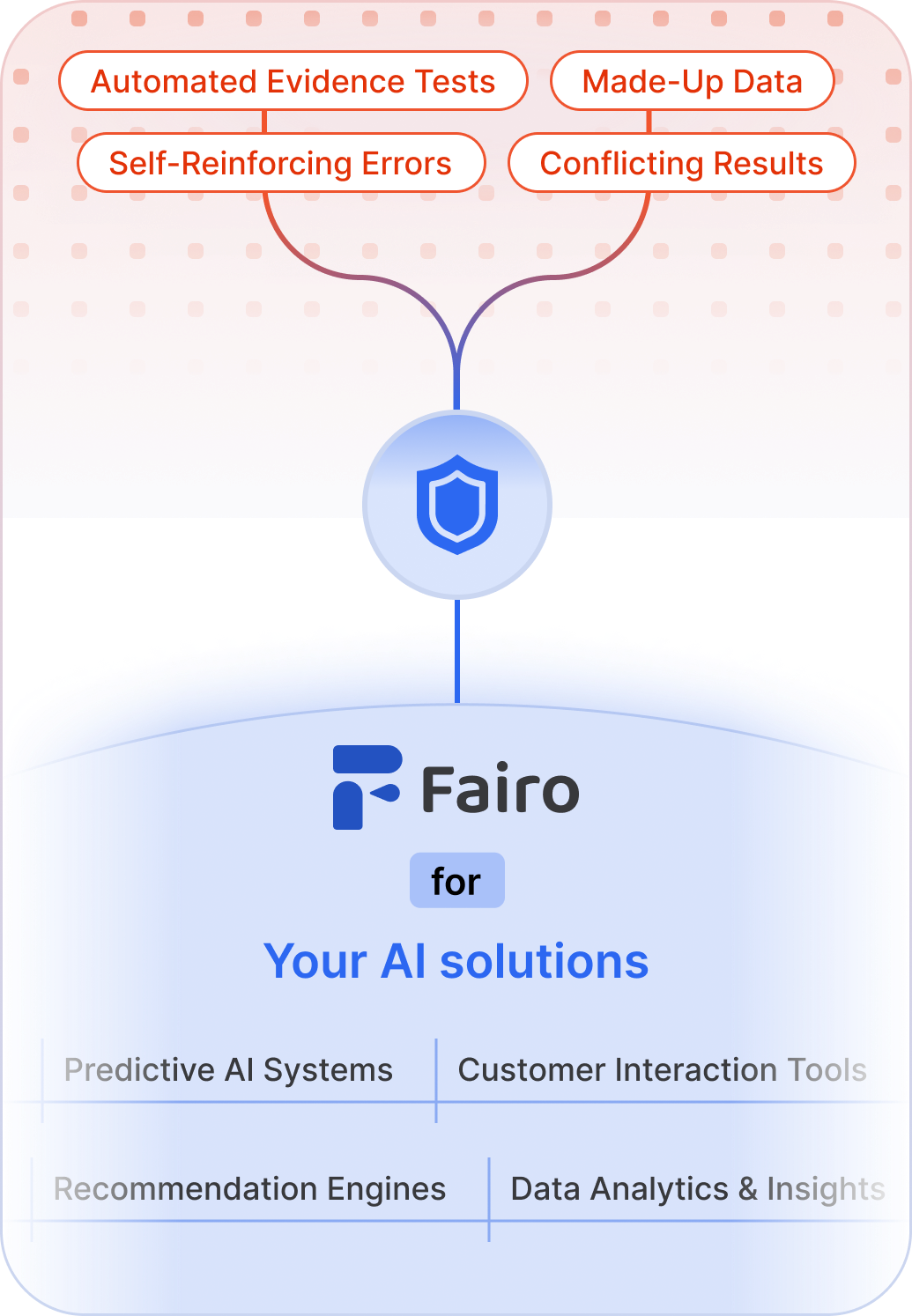

"Hallucination" is just a fancy word for a model that is not working. Errors need to be addressed before they become impacts.

AI hallucinations can affect your organization's data security, operations, reputation, and financial standing.

Hallucinations can be toxic, biased, and dangerous. Preventing harmful AI hallucinations is both a business and an ethical requirement.

Assess Use Cases and Vendors for Risks

Examine each AI use case and vendor for specific hallucination risks and key issues to remediate, keeping you focused on what matters most.

Assess Use Cases, Vendors and Risks

Examine each AI use case and vendor for potential risks and impacts to ensure reliable and safe outcomes.

Evaluate Data Quality and Complexity

Assess data for representativeness, complexity, and differences between training and production data to prevent misleading outputs.

Measure and Track Model Performance

Identify and monitor key model metrics to ensure performance aligns with your goals and expected outcomes.

Implement Additional Controls

Set up new processes, metrics, and data controls to continually improve AI reliability and safety.

Monitor and Reevaluate

Regularly review and adjust your AI systems to keep performance consistent and reduce risks.

Evaluate Datasets for Quality

Evaluate data for characteristics like representativeness and complexity. Detect anomalies and differences between training and production environments before impacts materialize.
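As a rough illustration of what detecting differences between training and production data can look like, the sketch below computes a Population Stability Index (PSI) for a single numeric feature. This is a generic technique, not a description of how Fairo works. The function name, the equal-width binning, and the sample data are assumptions made for the example. A commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, but a suitable threshold depends on your data.

```python
import math


def population_stability_index(train, prod, bins=10):
    """PSI between two numeric samples, using equal-width bins over the training range."""
    lo, hi = min(train), max(train)
    # Fall back to a width of 1.0 if every training value is identical.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the training range into the edge bins.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        eps = 1e-6  # avoids log(0) for empty bins
        return [max(c / len(values), eps) for c in counts]

    p = bin_fractions(train)
    q = bin_fractions(prod)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical data: production values are shifted relative to training.
train_sample = [i / 100 for i in range(1000)]
prod_sample = [x + 5 for x in train_sample]

same = population_stability_index(train_sample, train_sample)
shifted = population_stability_index(train_sample, prod_sample)
```

Here `same` is zero because the two samples are identical, while `shifted` is large because half of the production values fall outside the range seen in training.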

Test Model Performance

Test your model with evaluations designed to ensure its performance aligns with your goals and expected outcomes. Test results are benchmarked and mapped to categorized risks, turning them into actionable insights.
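One simple way to picture mapping test results to categorized risks is a table of benchmarks, each tied to a risk category. The sketch below is purely illustrative: the metric names, thresholds, and risk labels are hypothetical and are not taken from Fairo or any particular evaluation suite.

```python
from dataclasses import dataclass


@dataclass
class Benchmark:
    metric: str       # hypothetical metric name
    threshold: float  # minimum acceptable score (assumed)
    risk: str         # hypothetical risk category


BENCHMARKS = [
    Benchmark("groundedness", 0.90, "reputational"),
    Benchmark("toxicity_free_rate", 0.99, "ethical"),
    Benchmark("pii_leak_free_rate", 1.00, "data security"),
]


def flag_risks(results):
    """Return (risk, metric, score, threshold) for each metric that is missing or below its benchmark."""
    flagged = []
    for b in BENCHMARKS:
        score = results.get(b.metric)
        if score is None or score < b.threshold:
            flagged.append((b.risk, b.metric, score, b.threshold))
    return flagged


# Example evaluation run with made-up scores.
run = {"groundedness": 0.95, "toxicity_free_rate": 0.97, "pii_leak_free_rate": 1.0}
flagged = flag_risks(run)
```

In this made-up run, only the toxicity metric falls below its benchmark, so the single flagged item points to the "ethical" risk category. A metric that was never measured is also flagged, since an untested risk is still a risk.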

Implement Robust Controls

Set up new controls, processes, metrics, and tests to continually improve AI reliability. Tailored suggestions keep your efforts focused on the highest-impact activities.

Govern the Entire AI Lifecycle

Regularly review and update your AI systems across their entire lifecycle to keep performance consistent, reduce hallucinations, and mitigate risks.

AI hallucinations are a complex problem that requires a holistic solution. Prompt engineering is part of the solution, but there are many other elements. Even if your machine learning platform has the best observability suite on the market, it doesn't have access to the same data Fairo has. Fairo sees the bigger picture and understands your AI systems more deeply than any other platform. We look at all aspects of the system, from training data to intended uses and impacted parties. Through this oversight, we can provide the recommendations needed to solve the problem, wherever in the AI lifecycle it arises.

Fairo adds value to your existing machine learning platform by bringing data and insights to the table from other parts of your organization. For example, many machine learning providers help you run metrics but don't tell you which ones to run, how often to run them, or when to run them. Fairo takes care of all this and more. By integrating Fairo into your machine learning platform, you will unlock value from it in ways you hadn't imagined possible.

AI technology is changing rapidly, and keeping up requires a flexible technology model and an understanding of foundational concepts. Our technology architecture allows us to map concepts across AI architectures and risk taxonomies without having to rebuild the underlying repository of data. When technology changes, we are able to remap your data quickly, discover whether any gaps exist, and determine how to adapt efficiently.

Fairo implements rigorous IT and security controls. We are SOC 2 compliant and have robust data processing agreements and security policies in place for our customers. For more information on how we protect your data, visit our trust center.